Why AI Agents Failed - and the Practical Agent Pattern That Actually Works Today

For the last 18 months, AI agents have lived in the same fantasy space as flying cars and consumer jetpacks: promised constantly, demoed beautifully, and almost entirely absent from real-world productivity workflows.

Founders pitched them. Investors funded them. Operators dreamed about them.

And then came the reality check.

The early crop of agents face-planted on simple workflows. They got lost, got confused, repeated themselves, or (my personal favorite) confidently made blunders that even a careless human would never make. They didn’t understand business logic, context, or the nuance inside messy systems. They didn’t fail catastrophically... they just quietly disappointed.

The fantasy was autonomy. The reality was babysitting the agent, then giving up and doing the damn thing the old-fashioned way.

Why Most Agents Failed

We already know that LLMs aren't short on horsepower or impressive outputs. But they need the right environment to be useful.

Here’s where things went sideways:

Messy real-world systems

APIs (the classic handshake between systems) and MCP, the Model Context Protocol (a newer standard for connecting AI to tools), both introduce complexity that early agents weren’t built to handle. Fields conflict. Humans bend rules. Early agents had no way to cope with that kind of chaos.

No guardrails

Early agents were handed the equivalent of super-admin permissions and told “go nuts.” They did.

Over-trust from users

The interface looked confident, so people assumed the agent was competent. Bad assumption and bad outcomes.

Vendor incentives

Every platform wanted to claim “autonomous everything,” so they overreached. Big promises, minimal structure, and no safety rails.

Humans weren’t ready to give up control

Letting an AI “fire off emails on your behalf” sounds great in a keynote. It hits differently in a real inbox when your address book starts getting bombarded with AI copy-slop.

When people talked about agents, they imagined orchestration across multiple apps, tools, and business processes. An AI COO in your pocket. We are not there. Not even close.

Where Agents Actually Work Today

When you set aside the premature promise and look at what works well today, something more grounded (and more valuable) emerges:

Agents work when:

The scope is narrow

The system of record is clean

The actions are reversible

The user stays in the loop (I know - a little ironic)

Not very flashy, but very practical.

Instead of “run my entire workflow,” the winning pattern looks more like:

“Draft, sort, or prepare the first 60% for me, and then I’ll finish it.”

Humans still make the decisions.

Agents handle the grunt work.
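One way to picture "reversible actions with a human in the loop" is a simple allowlist. This is only a sketch: the action names below are hypothetical and don't correspond to any real Gmail or Zapier API. The idea is that the agent may do reversible things on its own, and everything else waits for a person.

```python
# Hypothetical action names; the point is the allowlist, not a real API.
REVERSIBLE_ACTIONS = {"apply_label", "save_draft"}

def gate(action: str, approved_by_human: bool = False) -> bool:
    """Allow reversible actions automatically; anything else needs a human."""
    if action in REVERSIBLE_ACTIONS:
        return True
    return approved_by_human

print(gate("save_draft"))                          # reversible, allowed
print(gate("send_email"))                          # not reversible, blocked
print(gate("send_email", approved_by_human=True))  # allowed once a human says yes
```

Keeping the allowlist short is the design choice here: a new action stays blocked until someone deliberately adds it.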

The Ecosystem Trap (and How People Fall Into It)

This is where the conversation needs a Thanksgiving-sized serving of honesty. Tools like Superhuman's email client deserve credit; they were early, and they showed the art of the possible for AI-driven inbox triage. But they also require something costly: moving your entire workflow into their world.

At the close of 2025, that’s a risky bet.

The rate of change is so fast that any killer feature today has a decent chance of being absorbed by Gmail, Outlook, or your LLM du jour tomorrow. I’ve learned the hard way that uprooting your entire ecosystem for one shiny capability is usually a mistake. You uproot everything… and two months later, the platform you left rolls out the same feature.

It's another can of worms, but we're seeing something similar with AI-first browsers. Yes, there are some really cool features, but are they worth giving up all of my Chrome extensions and sometimes basic functionality?

The smarter path is to layer, not migrate.

Experiment, don’t relocate.

Test, don’t re-platform.

Tread lightly. Try things. But avoid burning down your workflow unless you absolutely have to. You may need it again soon.

It reminds me a lot of the promise of home automation. I knew some people who went whole hog on automation infrastructure for their new home builds or remodels so that they could pretend to be The Jetsons. First off, none of it really worked that well. And then came all of the bolt-on tools like Alexa, HomeKit, and Google Home that worked just as well in a rented apartment as they did in a McMansion. It's a much more reasonable way to ease into a quickly evolving space.

Real Agents That Are Really Useful

Here’s the surprising twist: some of the truly useful, broadly accessible agents aren't living inside a fancy AI-native email client at all. They live in Gmail, plus a tiny bit of automation plumbing.

Gmail + Zapier + your LLM of choice isn't a bad place to extract some real agent utility.

If you’ve never used Zapier, think of it as the connective tissue of the internet: it links one tool to another without code. A more powerful alternative, n8n, is source-available, self-hostable, and adored by technical operators (often referred to as "n8n bros"). But Zapier is a decent gateway drug: fast, safe, and perfect for experimentation.

And now Zapier has “AI Actions,” which let you pair Gmail with any major LLM (ChatGPT, Claude, Gemini) and give it an actual job.

You can build a real agent - not a demo, not aspirational marketing - in about 15 minutes.

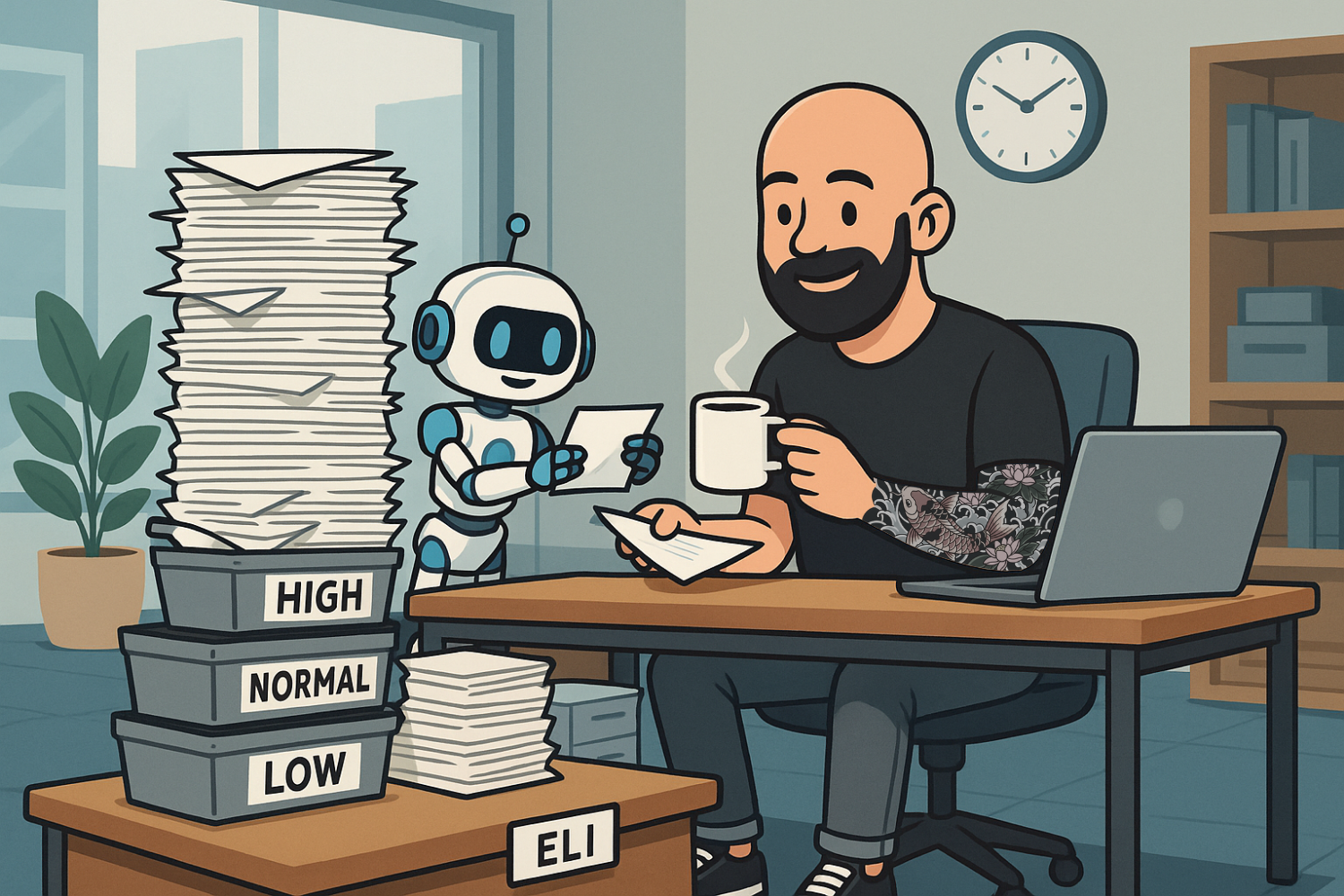

The Inbox Triage Agent

Here’s the version of an agent that works today: it watches your Gmail, sorts new emails by importance, drafts replies for the ones that matter, and never sends anything without you.

No big ecosystem shift. Very little risk, since nothing goes out without your say-so. Just a smarter first pass on your inbox.

How It Works (in human terms)

1. A new email hits your Gmail inbox.

2. Zapier hands that email to your chosen LLM (ChatGPT, Claude, Gemini).

3. The LLM reads it and decides:

- Is this High, Normal, or Low priority?

- Does it deserve a reply?

- If yes, what’s a good draft response?

4. Zapier then:

- Applies a Gmail label like “AI–High”

- Saves the draft reply inside Gmail

5. You skim, edit, and send.

The agent handles the grunt work. You stay in control.
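For readers who think in code, the five steps above can be sketched roughly as follows. Everything here is an assumption for illustration: `Email`, `TriageResult`, `triage`, and `fake_llm` are made-up names, and the toy classifier stands in for the real LLM call that Zapier would make. Note that the sketch has no send step at all; the most it can produce is a label and a draft.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical names throughout: a sketch of the flow, not Zapier's or Gmail's API.
VALID_PRIORITIES = ("High", "Normal", "Low")
LABEL_PREFIX = "AI–"  # matches the "AI–High" style label from the steps above

@dataclass
class Email:
    sender: str
    subject: str
    body: str

@dataclass
class TriageResult:
    label: str            # Gmail label to apply
    draft: Optional[str]  # reply saved as a draft, never sent

def triage(email: Email, classify: Callable[[Email], dict]) -> TriageResult:
    """Run one email through the flow: classify, label, and optionally draft."""
    decision = classify(email)  # stand-in for the LLM step
    priority = decision.get("priority")
    if priority not in VALID_PRIORITIES:
        priority = "Normal"  # fall back if the model returns something unexpected
    draft = decision.get("draft") if decision.get("needs_reply") else None
    return TriageResult(label=LABEL_PREFIX + priority, draft=draft or None)

def fake_llm(email: Email) -> dict:
    """Toy classifier standing in for ChatGPT, Claude, or Gemini."""
    if "invoice" in email.subject.lower():
        return {"priority": "High", "needs_reply": True,
                "draft": "Thanks, I'll review the invoice today."}
    return {"priority": "Low", "needs_reply": False, "draft": ""}

result = triage(Email("cfo@example.com", "Invoice overdue", "Please advise."), fake_llm)
print(result.label)
print(result.draft)
```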

How to Set It Up (the simple version)

You don’t need to code or configure anything complicated. You’re just telling the LLM how you want it to behave.

1. Create your priority rules inside the Zap

When Zapier asks what instructions to give the LLM, you paste in a short description of what “High,” “Normal,” and “Low” mean. It’s just text in the prompt box; nothing more.
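To make "a short description" concrete, here is a sketch of what the rules and the resulting prompt might look like. The rules themselves are invented examples, and `build_prompt` is a hypothetical helper; in Zapier you would simply type the equivalent text into the prompt box. Asking the model for a fixed JSON shape is an assumption on my part, but it tends to make the later label and draft steps easier to wire up.

```python
# Example rules only; replace with what "High", "Normal", and "Low" mean for you.
PRIORITY_RULES = """\
High: from my manager, a customer, or anything with a deadline this week.
Normal: colleagues asking questions that can wait a day or two.
Low: newsletters, receipts, automated notifications.
"""

def build_prompt(rules: str, sender: str, subject: str, body: str) -> str:
    """Combine the priority rules and one email into a single instruction block."""
    return (
        "You triage email. Use these rules:\n"
        f"{rules}\n"
        "Reply with JSON only, using the keys "
        '"priority" (High, Normal, or Low), "needs_reply" (true or false), '
        'and "draft" (a reply, or an empty string).\n\n'
        f"From: {sender}\nSubject: {subject}\n\n{body}"
    )

prompt = build_prompt(PRIORITY_RULES, "client@example.com",
                      "Contract question", "Can we talk Friday?")
print(prompt)
```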

2. Connect Gmail

Zapier uses your permission to watch for new emails. Two clicks.

3. Connect your LLM of choice

Pick the brain you want the agent to use.

4. Build the flow

- Trigger: New email in Gmail

- Step 1: Send the email text + your rules to the LLM

- Step 2: Apply a Gmail label based on the LLM’s output

- Step 3: Save the draft reply the LLM wrote
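Zapier's built-in field mapping usually covers Steps 2 and 3, so the following is optional. If your automation tool offers a code step and you want stricter handling of the model's reply, the logic might look something like this sketch. It assumes the LLM was asked to return JSON with `priority`, `needs_reply`, and `draft` keys; that format, the function name, and the label prefix are all assumptions, not anything Zapier prescribes.

```python
import json

ALLOWED = {"High", "Normal", "Low"}
LABEL_PREFIX = "AI–"  # hypothetical label naming, following the "AI–High" example

def parse_llm_output(raw: str) -> dict:
    """Turn the model's reply into a label and a draft, with safe fallbacks."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        data = {}  # the model replied with something other than JSON
    if not isinstance(data, dict):
        data = {}
    priority = str(data.get("priority", "")).strip().capitalize()
    if priority not in ALLOWED:
        priority = "Normal"  # unknown or missing priority gets the middle bucket
    needs_reply = data.get("needs_reply") is True
    draft = str(data.get("draft") or "").strip() if needs_reply else ""
    return {"label": LABEL_PREFIX + priority, "draft": draft}

print(parse_llm_output('{"priority": "high", "needs_reply": true, "draft": "Sure, Friday works."}'))
print(parse_llm_output("Sorry, I can't help with that."))
```

Defaulting to "Normal" on bad output is a judgment call: it keeps a confused model from silently burying an email under "Low".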

5. Test it on 10 recent emails

You’ll immediately see where your rules were too vague or too strict. Tweak them once or twice until the drafts feel like something you’d actually send.
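If you want something slightly more systematic than eyeballing, you could jot down your own label for each test email and compare it with the agent's. The sketch below does that; the sample data and the `review` function are invented for illustration, and four emails stand in for your ten.

```python
from collections import Counter

def review(samples):
    """samples: list of (subject, your_label, agent_label) tuples."""
    misses = [(s, mine, agent) for s, mine, agent in samples if mine != agent]
    agreement = 1 - len(misses) / len(samples) if samples else 0.0
    # Count which (yours, agent's) mix-ups happen most often.
    confusion = Counter((mine, agent) for _, mine, agent in misses)
    return agreement, misses, confusion

# Made-up test set; use your own recent emails.
samples = [
    ("Invoice overdue", "High", "High"),
    ("Weekly digest", "Low", "Low"),
    ("Quick question", "Normal", "High"),
    ("Lunch Thursday?", "Normal", "Normal"),
]

agreement, misses, confusion = review(samples)
print(f"Agreement: {agreement:.0%}")
for subject, mine, agent in misses:
    print(f"  '{subject}': you said {mine}, agent said {agent}")
```

If the same mix-up keeps appearing (say, Normal emails landing in High), that is usually the rule to tighten first.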

After that, the agent runs in the background like the quiet, boring, but consistent helper that it is (exactly how the first generation of agents should behave).

End result:

A real agent.

A cleaner inbox.

A calmer mind.

Finding the Entry Points for Agentic AI

Email triage is universal.

It’s painful. For some, it's the bane of their knowledge-worker existence.

And the promise to fix it all has been “just around the corner” for a decade.

But it also happens to be the perfect proving ground for the modern agent pattern: bounded autonomy, human approval, tool use, and repeatable structure.

If you understand this, you understand where agents are really heading. Not towards “run my whole life,” but “take the first pass, so I can do the part that matters.”

That’s the real promise.

Not automation, but acceleration.

Find your next edge,

Eli
