Something important happened this week in AI policy, and most people barely noticed.

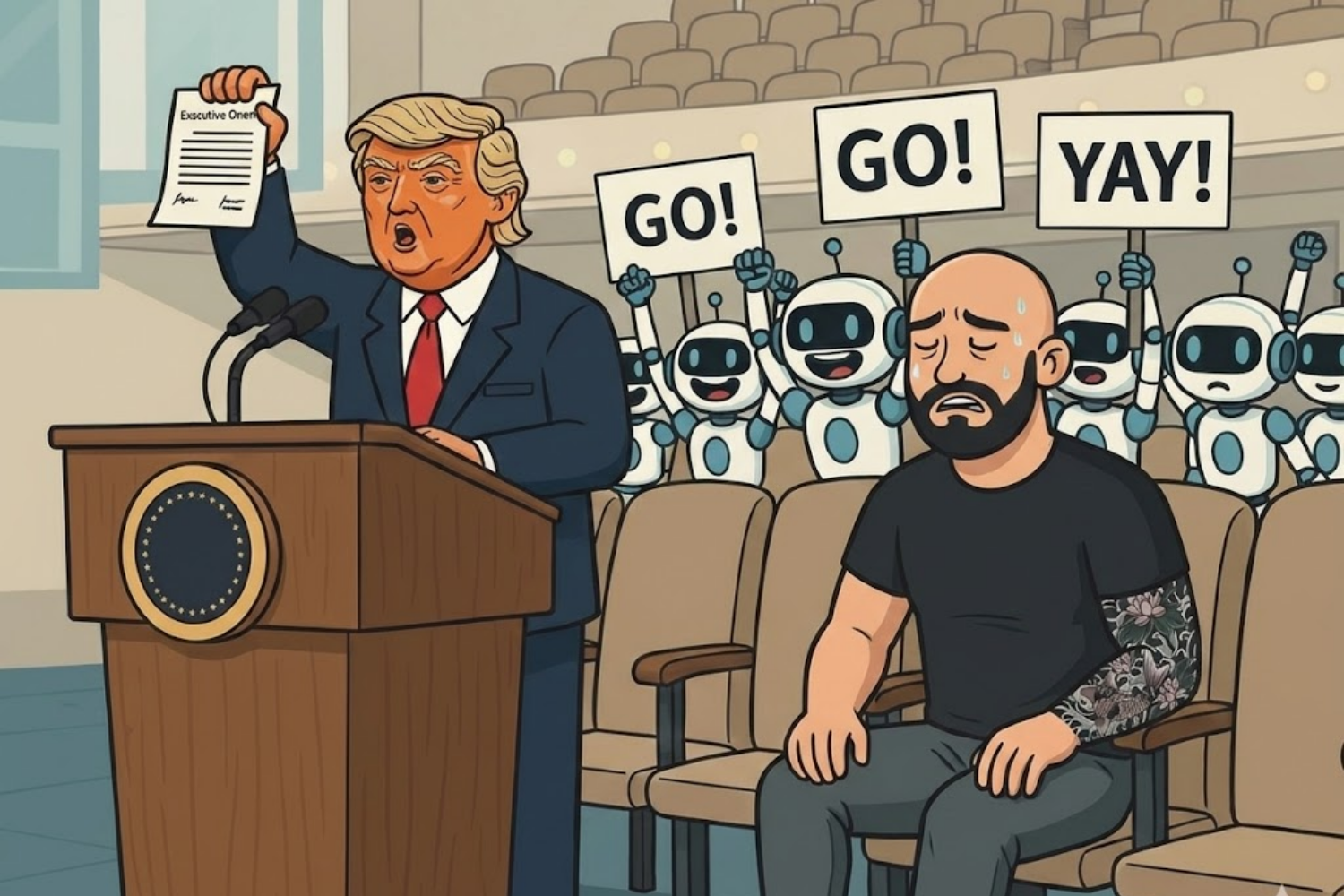

The White House issued an executive order aimed at eliminating state-level barriers to a unified national AI policy. You can read it as a pro-innovation move, a power grab, or a necessary response to global competition (and by global, everyone knows it really means China). Reasonable people will land in different places. As somebody who might have more insight than the average citizen, I feel conflicted. Very conflicted.

But feelings aside, here’s the reality of how this will affect the way we all work:

AI governance is accelerating upward and outward at the same time. Faster decisions. Fewer local exceptions. Less patience for fragmentation.

And most people and organizations are nowhere near ready for what that actually means.

Not because they lack tools. Not because they lack ambition.

But because they’ve never clarified how AI fits into who they are.

This moment is less about regulation than it is about exposure.

When rules centralize and speed increases, the advantage doesn’t go to the most compliant companies.

It goes to the ones that are already decision-ready.

Why this order exists - and why that should give us pause

Let’s be clear-eyed about the motivation behind this order.

You'll hear things like consumer protection, innovation or administrative efficiency. But at the core, this is about geopolitical competition. Specifically, the belief that the United States cannot afford a fragmented AI landscape while China moves with centralized coordination, massive state backing, and very few ethical constraints.

From that lens, the logic is straightforward:

Speed matters.

Scale matters.

Falling behind is not an option.

The danger is not that this logic is irrational. It's that the logic is incomplete.

Centralization optimizes for velocity. But here's the catch: it does not automatically optimize for wisdom, safety, or long-term resilience. When everything is framed as a race, nuance becomes friction. Local context becomes inconvenient. Human consequences become externalities.

History is littered with technologies that advanced faster than the social systems meant to absorb them. But this one? It's moving faster than anything humanity has ever experienced.

So yes, this order may help the U.S. compete.

It may also encourage a version of AI development that outruns our ability to govern it thoughtfully inside our organizations and our lives (best case), or to control it at all (worst case).

But let's bring this out of Washington and straight into the workplace, where we have a little more agency.

Why this matters more than the headlines suggest

Most AI conversations still revolve around surface-level questions:

Which tool?

Which vendor?

Which department owns it?

Those are easy questions. They feel productive. They also miss the point.

Policy acceleration does one uncomfortable thing exceptionally well: it removes your ability to stall.

You can no longer wait for perfect clarity.

You can no longer hide behind “we’re still exploring.”

And you definitely can’t outsource your thinking to whatever LinkedIn thread went viral this week.

AI policy volatility raises the cost of indecision. And indecision has a very human side effect: anxiety.

Individuals don’t experience AI shifts as abstract strategy.

They experience them as threats to competence, relevance, and control.

If leadership doesn’t provide a narrative, employees will write their own. And those stories are almost always darker than reality.

Changing the frame from AI-first to decision-ready

Here’s the reframe worth sitting with:

Prepared organizations are not the ones doing the most with AI.

They’re the ones who have already done the internal thinking AI now forces.

You don’t need a policy memo to get started.

You need posture.

A simple way to assess readiness is to ask three questions. Not rhetorically. Actually answer them.

FIRST: Do we know where AI actually touches value in our business?

Not where AI could be used.

Not where a vendor demo looks impressive.

Where does value get created, protected, or lost today?

Where are decisions slow, manual, or inconsistent?

Where does human judgment truly matter?

Where would errors be expensive, not just inconvenient?

AI amplifies whatever already exists. If your processes are fragile or poorly understood, automation won’t fix that. It will expose it.

Prepared companies can articulate where experimentation is safe and where it’s dangerous. Everyone else is just guessing.

SECOND: Have we decided what we are optimizing for?

Most organizations say they want speed, safety, and leverage.

Prepared ones choose.

Speed means learning in public and accepting mess.

Safety means documentation, constraint, and patience.

Leverage means multiplying your best people, not replacing average ones.

There is no universally correct choice. There is only the cost of pretending you can have all three at once.

This executive order signals one thing clearly:

AI adoption pressure will increase regardless of organizational comfort.

Indecision becomes the riskiest position to take. Choosing imperfectly beats waiting perfectly.

THIRD: Do our people understand the intent behind our AI decisions?

This is the part almost everyone skips. People don’t resist AI because they hate technology (well, maybe a few).

They resist it because uncertainty feels personal.

Prepared leaders answer, repeatedly and plainly:

What AI is for here.

What it is not for.

What will still require humans in the loop versus at the center.

The vacuum of silence often gets filled with fear. Especially when policy headlines make it feel like decisions are happening far away and fast.

But actively shaping the narrative can give people back a sense of where they fit in this new direction.

If your team cannot explain in one sentence why AI is being used in your organization, trust is already an issue.

The individual layer that most leaders overlook

Let's zoom out one more level. The same forces reshaping organizations are reshaping careers.

Policy may determine the speed of AI development, but literacy determines who benefits from it.

AI literacy does not mean knowing how to prompt better than your peers. It means understanding:

What these systems are good at.

What they are terrible at.

Where they are a poor fit, hallucinate, or mislead.

How human judgment still fits in the loop (important!).

The safest individuals in an accelerated AI world are not the most technical. They are the most fluent.

Fluent enough to ask better questions.

Fluent enough to sense when automation is masking bad thinking.

Fluent enough to use AI as leverage rather than surrendering agency to it.

Waiting for regulation to settle before engaging with AI is the individual version of corporate indecision. It may feel like strategic prioritization, but in reality it is a risky bet.

The uncomfortable truth this moment reveals

This executive order doesn't force anyone to adopt AI faster tomorrow.

But it removes the illusion that waiting is a strategy.

Some companies will interpret this moment as a compliance exercise, or the ultimate smack-down between state and federal law.

But the sharpest ones will see it as permission to finally answer questions they've been avoiding.

Some individuals will stay spectators. Others will build literacy, judgment, and leverage while the rules are still being written.

Those are VERY different futures.

If regulation disappeared tomorrow, would your AI strategy still make sense?

If the tools changed overnight, would your intent remain intact?

If your people asked "why are we doing this," could you answer without pointing to a rollout timeline or license count?

The winners won't be the loudest or the fastest. They'll be the ones who know exactly what they're doing and why.

Clarity isn't something an executive order can mandate. But it's the only sustainable advantage left.

Find your next edge,

Eli
