The Lazy AI Trap: Why Artificial Intelligence Scales Your Strengths, and Your Flaws

AI Scales Whatever You Already Are

AI is a lot like alcohol. It doesn’t change who you are; it amplifies it. Every leadership team I meet wants the same thing from artificial intelligence: more speed, lower costs, and better results. But here’s what most miss: AI doesn’t fix weak teams or broken systems; it scales them. If your processes are unclear, your data messy, or your standards low, AI will multiply those flaws at machine speed.

In the world I operate in, AI will not replace the writer, the designer, or the developer. It will make them 10x more effective. The catch is quality control. One capability AI does not have right now is taste. Taste is judgment under constraints. It is the human ability to tell good from bad, and to know when to push harder versus start over. Without taste, you are shipping acceptable, not excellent.

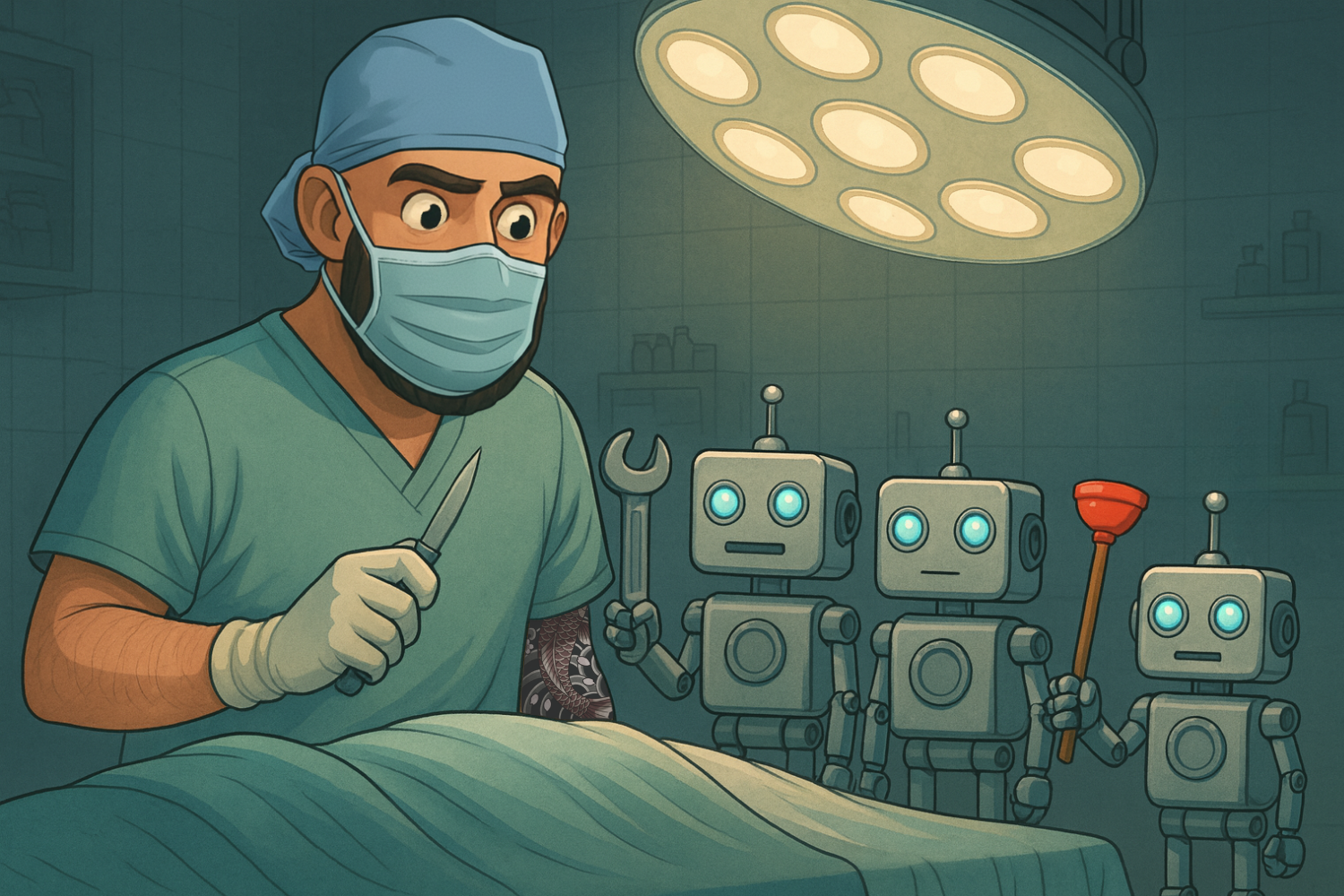

Put differently: AI is a force multiplier. In the hands of a talented surgeon, a scalpel saves lives. In the hands of a plumber during surgery... not so much. Tools only matter in proportion to the judgment behind them.

When “Looks Great” Isn’t Good

We see this weekly. Someone with little design experience uses AI to generate a homepage. The first response is often, “Looks great.” It’s clean. There are gradients. Icons are aligned. But a senior designer can immediately tell when the hierarchy is off, the type pairing fights the brand, the visual rhythm is flat, and the hero copy fails the job to be done.

That delta is taste. It is not snobbery, and don't confuse it with vibes. Taste is a trained pattern library plus a standard for outcomes. The writer with taste knows when a paragraph is technically correct but emotionally empty. The developer with taste knows when a solution works but its tradeoffs will cause downstream pain (and knows why when it doesn't work). The designer with taste knows when a composition is pretty but communicates the wrong priority.

This is why “just give the prompt to AI” routinely disappoints. If the operator cannot judge the output, they cannot iterate. If they cannot iterate, they cannot improve. The result is a wall of mediocre assets that are hard to defend and even harder to ship with confidence.

Five Moves To Keep You Out Of The Trap

1) Run the AI Fit Test before you automate

Decision density. Are many small decisions required, or just a few big ones? High decision density needs human taste in the loop.

Repeatability. Is the task repeatable enough to learn from feedback quickly?

Data readiness. Do you actually have clean examples of “what good looks like”?

Error tolerance. If it is wrong, what is the cost? High-cost tasks demand human sign-off.
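If you want the Fit Test to be a repeatable step, you can turn the four questions into a yes/no checklist. Below is a minimal Python sketch of that idea. It is an illustration under assumptions, not a formal method. The `FitTestAnswers` fields and the `fit_test` rules are hypothetical. Treating a task as "not ready" when it lacks repeatability or clean examples is one reasonable reading of the test. The "spot checks" tier for low-density, low-cost work is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class FitTestAnswers:
    """Hypothetical yes/no answers to the four AI Fit Test questions."""
    high_decision_density: bool  # many small judgment calls per task?
    repeatable: bool             # repeatable enough to learn from feedback quickly?
    has_good_examples: bool      # clean examples of "what good looks like"?
    high_error_cost: bool        # is a wrong answer expensive?


def fit_test(answers: FitTestAnswers) -> str:
    """Return a rough recommendation; these rules are illustrative assumptions."""
    # No fast feedback loop or no reference examples: nothing to calibrate against yet.
    if not answers.repeatable or not answers.has_good_examples:
        return "not ready: fix the process or gather examples first"
    # Dense judgment calls or costly errors keep a human with taste in the loop.
    if answers.high_decision_density or answers.high_error_cost:
        return "automate with human sign-off"
    # Assumed tier: low-density, low-cost work gets lighter review.
    return "automate with spot checks"


# Example: a repeatable task with good examples, but many small judgment calls.
print(fit_test(FitTestAnswers(
    high_decision_density=True,
    repeatable=True,
    has_good_examples=True,
    high_error_cost=False,
)))  # automate with human sign-off
```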

2) Install a Taste Gate

Create a simple rubric that defines quality for the task:

Purpose. What must this asset achieve in the real world?

Success criteria. 3 to 5 binary checks. For design, that might be visual hierarchy, legibility, brand voice alignment, and accessibility basics.

Reference set. 5 examples of “good” and 3 of “not good,” with one-line reasons.

Assign a single taste owner per domain who signs off on output before it goes live.
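Capturing a Taste Gate as a small data structure helps every asset get reviewed against the same checks. The Python sketch below is hypothetical: the `TasteGate` class, its fields, and the sample purpose and checks are illustrative assumptions. It deliberately leaves the judgment to a person. The taste owner answers each binary check, and any miss blocks shipping. The reference set of good and not-good examples is not modeled here.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class TasteGate:
    """Hypothetical rubric: one purpose, 3 to 5 binary checks, one taste owner."""
    purpose: str       # what the asset must achieve in the real world
    owner: str         # the single person who signs off before it goes live
    checks: List[str]  # binary checks the owner answers yes or no

    def __post_init__(self) -> None:
        # Enforce the "3 to 5 binary checks" guideline from the rubric.
        if not 3 <= len(self.checks) <= 5:
            raise ValueError("a Taste Gate needs 3 to 5 binary checks")

    def review(self, answers: Dict[str, bool]) -> Tuple[bool, List[str]]:
        """Return (ready_to_ship, failed_checks); unanswered checks count as failures."""
        failed = [check for check in self.checks if not answers.get(check, False)]
        return (not failed, failed)


gate = TasteGate(
    purpose="Homepage hero that tells first-time visitors what we do",
    owner="design lead",
    checks=["visual hierarchy", "legibility", "brand voice alignment", "accessibility basics"],
)
ok, failed = gate.review(
    {"visual hierarchy": True, "legibility": True, "brand voice alignment": False}
)
print(ok, failed)  # False ['brand voice alignment', 'accessibility basics']
```

The checks are plain yes/no questions answered by a person on purpose. The structure enforces consistency, while the call itself stays with the taste owner.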

3) Write prompts like job descriptions, not wishes

Give AI what a great contractor would need:

Context. Audience, channel, constraints, and success criteria.

Inputs. Brand guidelines, examples to match or avoid, required components.

Process. Ask for 3 versions, each taking a different approach. Request a rationale for each choice.

Review. Tell the model to critique its own output against the rubric before you see it.

4) Calibrate with comparisons, not opinions

Always compare AI output against two anchors:

A baseline. Your current best example. If AI cannot beat it, do not ship it.

A benchmark. A top-tier external example. Ask, “What would have to be true for ours to compete with this?” Use that gap to guide iteration.

5) Build a Taste Ladder inside your team

Taste is trainable. Here's how to make it a habit, not a hope.

Weekly 30-minute Taste Review. One domain per week. Pull 5 pieces of work. Score against the rubric. Discuss tradeoffs out loud.

Critique with constraints. “If we had to ship in 24 hours, what is the smallest change that drives the biggest improvement?”

Library of learning. Save before-and-after pairs with one-line lessons. This becomes your institutional pattern library.

Keep a Talented Human in the Loop

Not just in the loop, but really running the show. AI is only as sharp as the person guiding it. Every system needs a domain expert who brings both taste and time-earned intuition, the kind that only comes from years of grinding out the work before automating it. This person isn’t a “prompt engineer”; they’re a craft translator who bridges the gap between what the machine can do and what excellence actually looks like.

Here’s how to do it well:

Assign a “Taste Steward” per function.

For each domain (design, writing, dev, ops, CX), designate one person responsible for curating what “good” means. Their job isn’t to make everything themselves, but to ensure the AI learns from the right examples.

Choose experience over enthusiasm.

The person teaching the machine should be someone who’s lived through bad decisions, mediocre output, and the slow climb toward mastery. You want the person who’s been burned enough times to know why something looks or sounds wrong.

Use them to create “Taste Checkpoints.”

Before output goes live, have this expert review both the human and AI-generated work side by side. The goal is calibration: making sure the machine learns what excellence feels like.

Document their intuition.

Have them narrate, in plain language, why they make the decisions they do. These narrations become training assets, style guides, prompt templates, and even material for custom models, distilling years of expertise into teachable heuristics.

Protect their focus.

The biggest waste of a senior expert is burying them in admin or review queues. Let them focus on shaping the system, not cleaning up its messes. Their time should go to refining the loop as opposed to repeating it.

Tool spotlight

For design: maintain a living style guide, component gallery, and complete designs that AI can reference.

For writing: keep a voice charter with word choice rules, banned phrases, and before/after pairs.

For dev: keep a set of “golden paths” and code snippets that reflect preferred patterns.

For customer experience: store transcripts and annotated examples of excellent vs. poor customer interactions. AI learns from data; humans decide which tone earns trust.

For leadership communications: build a “decision narrative library” of one-pagers explaining past choices and their tradeoffs. AI can summarize outcomes, but only humans with taste can recognize which decisions actually built credibility.

Try this question

Before using AI on any creative task, ask: “Who here has the taste to decide if the output is good enough to ship, and what are they using to make that call?” If you do not have a clear answer, you are not ready to automate.

The Edge You Cannot Automate

The market is about to be flooded with work that is fine. That is your opportunity. AI will make mediocrity effortless. Taste + AI makes excellence inevitable. The winning teams are not the ones with the most tools. They are the ones with the clearest standards, the fastest feedback loops, and the courage to say, “Start over. This is not good enough yet.”

If you fix your system and protect your taste, AI becomes the best hire you can make this year. If you do not, you will just get bad faster.

Find your next edge,

Eli

Want help applying this to your product or strategy? We’re ready when you are → Let's get started.